Dreamglass by Mattress Firm

A fun, interactive experience designed to create intrigue, made by Microsoft for Mattress Firm.

Showcased at the LA Car Show 2021 and Houston Rodeo 2022.

Made with Unity and Azure Kinect

My role: User experience design, Unity development and body tracking data processing

Experience overview

The experience was deployed at trade shows and exhibitions. Members of the public were invited to participate, providing a focal point and allowing potential customers to engage with the brand.

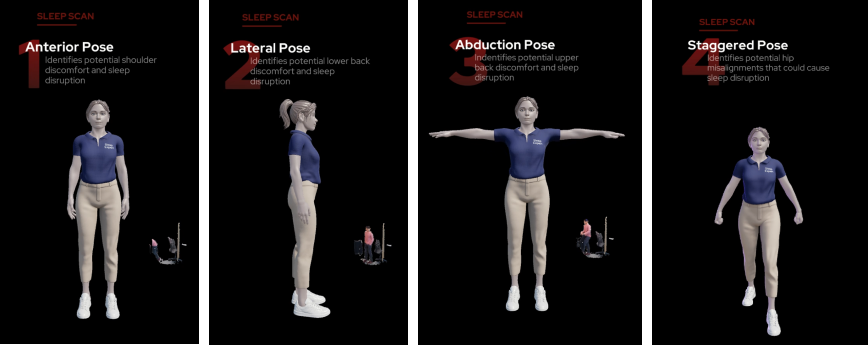

The experience asked users to strike poses, which were analysed using body tracking algorithms, courtesy of the Azure Kinect.

Working with the Azure Kinect

The Azure Kinect device provides poses of 32 joints in the skeleton. Specific joints of interest are used to calculate joint angles using vector arithmetic.
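As a rough illustration of the vector arithmetic involved, here is a minimal sketch in Python (the project itself was built in Unity). The function name `joint_angle` and the plain `(x, y, z)` tuples are assumptions made for this example, not the project's actual code. It measures the angle at a middle joint from the positions of the two joints either side of it.

```python
import math

def joint_angle(a, b, c):
    """Return the angle in degrees at joint b, between segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("joints coincide, angle is undefined")
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

# Hypothetical positions: shoulder, elbow and wrist of an arm bent at a right angle.
shoulder, elbow, wrist = (0.0, 0.0, 0.0), (300.0, 0.0, 0.0), (300.0, 250.0, 0.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)
```

The same function works for any three joints, so the shoulder, elbow and wrist can each be measured by changing which joint sits in the middle.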

For example, during a T-pose, the neck, shoulder, elbow and wrist joints form a picture of the angle from shoulder to torso. This information is compared with the opposing side of the body for discrepancies.
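One way to picture the left/right comparison is the sketch below. The joint keys, the choice of measuring the angle at the shoulder between the neck and the elbow, and the 10 degree tolerance are all assumptions for illustration. The real assessment followed the physio's recommendations and is not reproduced here.

```python
import math

TOLERANCE_DEG = 10.0  # assumed threshold, not a value from the project

def angle_at(vertex, p, q):
    """Angle in degrees at `vertex` between the directions to p and to q."""
    v1 = [p[i] - vertex[i] for i in range(3)]
    v2 = [q[i] - vertex[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.sqrt(sum(x * x for x in v1)) * math.sqrt(sum(x * x for x in v2))
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def side_discrepancy(joints):
    """Absolute difference between the left and right shoulder angles."""
    left = angle_at(joints["shoulder_left"], joints["neck"], joints["elbow_left"])
    right = angle_at(joints["shoulder_right"], joints["neck"], joints["elbow_right"])
    return abs(left - right)

# Hypothetical T-pose: the left arm is level, the right elbow has drifted off line.
pose = {
    "neck": (0.0, 0.0, 0.0),
    "shoulder_left": (150.0, 0.0, 0.0),
    "elbow_left": (400.0, 0.0, 0.0),
    "shoulder_right": (-150.0, 0.0, 0.0),
    "elbow_right": (-380.0, 100.0, 0.0),
}
discrepancy = side_discrepancy(pose)
is_symmetric = discrepancy <= TOLERANCE_DEG
```

Because only the difference between the two sides is used, the check does not depend on which way the camera's axes point.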

I iterated through several ideas on how to provide a user with a pose assessment. I worked with Mattress Firm's innovation team and used their physio's recommendations on making localised angle comparisons between bones and joints.

Working with the magic mirror

As your reflection features heavily in the experience, a mirror was a natural fit. For kerb appeal, the combination of mirror and Kinect offers members of the public a novel experience. On the technical side, when it comes to giving visual feedback on your current pose, the speed of light beats a USB camera (the Azure Kinect).

There were design considerations around using the mirror, however, such as lining up the reflection with the UI, deciding where to draw the user's focus, and choosing rendering that would complement a variety of lighting conditions. Unlike an LCD screen, the mirror can't display black: dark areas of the UI simply show the reflection.

User input

The experience was used in public settings, so to maximise accessibility, no peripheral devices are required.

So how does the user navigate the experience?

The Azure Kinect has a depth sensor, so we know how far away the user is. When the user stands within a certain distance range, we can start the experience.
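The distance check can be sketched as follows. The near and far limits and the use of a single tracked joint position in millimetres are assumptions for this example. The actual thresholds used at the shows are not stated here.

```python
import math

NEAR_MM = 1500.0  # assumed closest distance at which the experience starts
FAR_MM = 3000.0   # assumed farthest distance at which the experience starts

def distance_mm(position):
    """Straight-line distance from the sensor origin to a tracked joint."""
    return math.sqrt(sum(c * c for c in position))

def in_start_zone(position, near=NEAR_MM, far=FAR_MM):
    """True when the tracked joint lies inside the start band."""
    return near <= distance_mm(position) <= far

# Hypothetical joint position two metres in front of the sensor.
ready = in_start_zone((0.0, 0.0, 2000.0))
```

In practice a check like this would likely need to hold for several frames in a row before starting, so that someone walking past does not trigger the experience.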

There are moments that require binary input. For these, we can ask the user to raise their left or right hand. In code, that's a simple dot product calculation between the left/right arm joints and a Vector.left/Vector.right.
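Here is a minimal sketch of that dot product check. The joint keys, the 0.8 threshold, and a coordinate frame in which the user's left is the negative x direction are all assumptions for illustration. The arm direction is taken from shoulder to wrist and normalised, so the dot product with a unit vector is the cosine of the angle between them.

```python
import math

LEFT = (-1.0, 0.0, 0.0)   # assumed unit vector pointing to the user's left
RIGHT = (1.0, 0.0, 0.0)   # assumed unit vector pointing to the user's right
THRESHOLD = 0.8           # assumed minimum cosine to count as a raised arm

def alignment(shoulder, wrist, reference):
    """Cosine of the angle between the shoulder->wrist direction and `reference`."""
    arm = [w - s for w, s in zip(wrist, shoulder)]
    length = math.sqrt(sum(c * c for c in arm))
    if length == 0:
        return 0.0
    return sum(a * r for a, r in zip(arm, reference)) / length

def read_choice(joints):
    """Return "left", "right", or None when neither or both arms are raised."""
    left_up = alignment(joints["shoulder_left"], joints["wrist_left"], LEFT) >= THRESHOLD
    right_up = alignment(joints["shoulder_right"], joints["wrist_right"], RIGHT) >= THRESHOLD
    if left_up == right_up:
        return None  # ambiguous input, keep waiting
    return "left" if left_up else "right"

# Hypothetical frame: left arm held out to the side, right arm hanging down.
frame = {
    "shoulder_left": (-150.0, 0.0, 0.0),
    "wrist_left": (-650.0, 50.0, 0.0),
    "shoulder_right": (150.0, 0.0, 0.0),
    "wrist_right": (150.0, -500.0, 0.0),
}
choice = read_choice(frame)
```

Returning nothing when both arms match keeps the input strictly binary: the experience only advances once exactly one arm clears the threshold.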